Hi!

I’m Jennifer, Class of 2018. I was a Biological Sciences major, and I’ve just started working in the HCI lab as a research fellow. This November, I travelled to Jersey City, New Jersey, with a Wellesley College delegation to attend the 21st ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW).

The venue, the Hyatt Hotel on the Hudson River, was well chosen. It had a stunning backdrop of the river, with Manhattan a short PATH ride away. The Colgate Clock provided an additional surprise on a darkened horizon, shown below.

The conference was well attended, but I didn’t find it overwhelming – a welcome relief considering that it was my first time attending a workshop and conference. I had an eye-opening experience, especially at the Sunday workshop on “Social Issues in Personal Informatics: Design, Data, and Infrastructure”.

The workshop began with a round of introductions – names, affiliations, and an introduction to our projects.

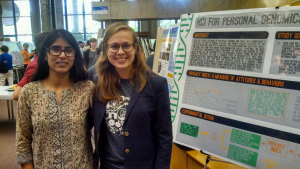

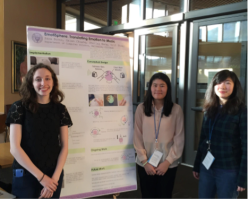

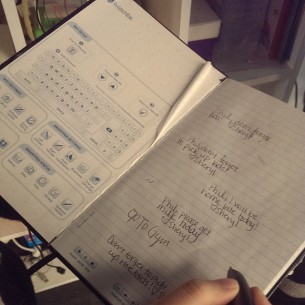

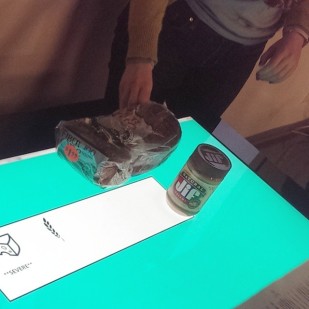

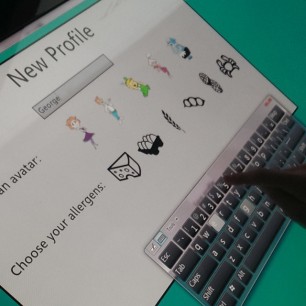

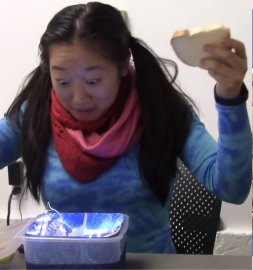

I presented an NSF-funded project that is intended to help users capture, visualize, and engage with social omic data. Omic data comes from comprehensive analysis of the genetic and molecular profiles of humans and other organisms. There now exist novel methods for rapid DNA testing that allow users to receive omic data not only about their genetic information and their personal microbiome but also about the plants and animals they interact with. These rapid testing methods can detect dangerous allergens, undesired ingredients in processed food, and microbes that cause foodborne illnesses. Testing of living surfaces can also provide greater awareness of one’s environmental omic data.

This environmental and nutritional omic data is of high social relevance. Consider a family or a group of people who share a living space. These groups are likely to share bacteria, and this microbiota can be influenced by shared lifestyle elements such as pets and food. Within these groups, people might want to share information about the presence of allergens and of particular ingredients, compare personal and environmental data, and investigate changes in their own and environmental omic data following lifestyle and/or environmental changes (e.g. diet, new pet, seasons, new furniture). However, tools for aggregating, exploring, sharing, and collaboratively making sense of such data are currently not available.

During this presentation, I was very nervous, but the audience, full of assistant professors, PhD students, and postdocs, was attentive and generous to an inexperienced research fellow eager to learn. The workshop was small and intimate. We completed two rotating panel-style discussions: one in the morning about the many technical dimensions of social engagement and one in the afternoon about ethics, privacy, and the impact of social change over time. These panels were interspersed with presentations related to the nature of social engagement, data representation of networks, ethics, and privacy. The workshop provided the grounds for rich and insightful discussions on data representation of networks and the ethical and social tensions that can arise as these networks become increasingly complex.

In the middle of this busy day, the workshop lunch at PiggyBack Bar, a southeast fusion waterfront venue, gave me a more personal way to understand the motivations and goals of my workshop colleagues. It also let me gather worthwhile advice from people who have stayed the course in academia. By the way, the food was delicious. 10/10, would recommend.

The following day, the conference opened with a keynote from Julia Angwin and Emily Ball, who spoke about the necessity of “adversarial” tech journalism and social computing research as a means of combating the “social climate change” which has been exacerbated by social technology giants such as Facebook, Twitter, etc. Ironically, I had Twitter open to follow the #CSCW hashtag as other attendees shared their criticisms, agreements, and observations of this dialogue live. As someone who neither caught everything the speakers said nor knew people there well enough to ask directly for their input, I found that the hashtag allowed me to engage with others and read their fresh perspectives on the keynote.

Tuesday was the last day I spent at the conference. I spent the morning session attending talks concerning feedback sense-making, motivations in online communities, disclosure and anonymity on online forums, and information sharing. I personally found the disclosure and anonymity session to be the most useful in terms of how I should view the project I’m now leading as a research fellow. One thing I learned was that, as tempting as it is to go to every single talk, a premeditated and concentrated approach is my preferred style for conferences. For lunch, Professor Catherine Delcourt arranged a CSCW Wellesley lunch in the hotel. I had spent my time largely alone, so it was great to be able to connect with the Wellesley presence while at CSCW. This blend of professors, alums, and undergrads allowed for a colorful exchange of experiences concerning their research paths.

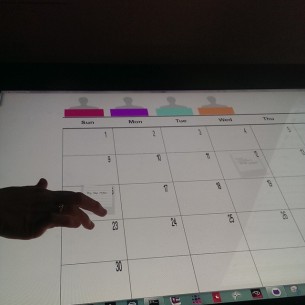

After lunch, I attended Professor Orit Shaer’s talk on “Understanding Collaborative Decision-Making Around A Large-Scale Table Top”, led by past HCI research fellow Lauren Westendorf in collaboration with Andrew Kun, Petra Varsanyi, and Hidde van der Meulen of UNH. Thus concluded my first CSCW experience, and I can say that I found it to be a positive and inspiring one thanks to the warmth and passion of the CSCW community, NSF funding, and the support of the HCI lab.

“I was incredibly fortunate to go to my first science conference as a student volunteer, and I had no idea what to expect. As a female undergraduate with no paper or poster to present, I was nervous about proving my place there and not making a fool of myself, even though I factually was a fool compared to the industry researchers and PhD students I met. But surprisingly, once I was at the conference, the gap in knowledge between me and these accomplished people was never a source of intimidation. The other student volunteers were incredibly welcoming and receptive to my questions about their research, never losing patience when I asked questions out of ignorance of computer science (I asked many of these questions). It was amazing to hear about the different projects they were developing, and what they were doing to widen the spectrum of computer science’s applications, whether it was in medicine or the arts or a classroom setting.

panelists one-on-one. Students were enthusiastic about the readings panelists had suggested and were eager to learn about how to enter the fields of video game design and development. Suggested readings included

introduced to a variety of interesting projects and frameworks through the paper talks and demo sessions. Stanford’s campus was definitely one to marvel at, with its red roofs and sandstone-colored walls.

y remote control car. You would drive the smaller one around and the larger one would drive in the same pattern. It was such a simple idea, but it makes so much sense. Rather than having to mentally translate the desired path of the car to the left-right/forward-back switches of typical toy car remotes, or even to the motion of a steering wheel, you could just imagine the path of the car and it would happen. One of our other favorite demos was a light-up flower (pictured on the right) that is connected to sensors in the user’s chair. The idea is that the flower sits casually on the user’s desk and droops and turns red when they have bad posture. It’s a fun and ambient reminder to have good posture.

Weather Blender, as its name suggests, is a blender that tells the weather. Based on the weather report for the day, Weather Blender produces a smoothie that reflects the forecast. Weather Blender consists of a blender and a container with four compartments that hold different types of fruit. In our configuration, we use strawberries, mango, banana, and blueberries. When the user wants a smoothie, they press a button on the Kinoma, which gets the weather from the web, generates a recipe, and uses motors to control flaps in each compartment, allowing the correct proportion of each type of fruit into the blender. If the weather is rainy, a blue smoothie is produced. For sunny weather, we chose to use orange. Clouds are banana, and warning weather is strawberry. For example, on a day that is hot and sunny, the smoothie will be mostly orange, with a bit of red.
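To make the recipe step a little more concrete, here is a minimal sketch in Python of how a forecast could be turned into fruit proportions. It is not the code that runs on the Kinoma: the condition names, the weights, and the `make_recipe` function are all assumptions made up for illustration, and we are reading "blue" as blueberries and "orange" as mango. Only the condition-to-fruit pairings come from the description above.

```python
# Hypothetical pairing of weather conditions with fruit. The pairings follow
# the description in this post; the condition names are assumptions.
FRUIT_FOR_CONDITION = {
    "rain": "blueberries",      # rainy -> blue smoothie
    "sun": "mango",             # sunny -> orange smoothie
    "clouds": "banana",
    "warning": "strawberries",  # warning weather -> red
}


def make_recipe(forecast):
    """Turn condition weights into fruit proportions that sum to 1.

    `forecast` maps condition names to non-negative weights, e.g. how strongly
    each condition features in the day's report (an assumed input format).
    """
    total = sum(forecast.values())
    if total <= 0:
        raise ValueError("forecast needs at least one positive weight")
    recipe = {}
    for condition, weight in forecast.items():
        fruit = FRUIT_FOR_CONDITION[condition]
        recipe[fruit] = recipe.get(fruit, 0.0) + weight / total
    return recipe


# A hot, sunny day: mostly orange with a bit of red.
recipe = make_recipe({"sun": 3, "warning": 1})
print(recipe)  # {'mango': 0.75, 'strawberries': 0.25}
```

In a setup like this, each proportion could then decide how long the flap on the matching compartment stays open, though the timing itself would depend on the hardware.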

The TreadLight Timer is a system that aims to provide more ambient and easily accessible information to someone cooking in a kitchen. The timer leverages the fact that there are really only three states of the timer the cook is interested in: the food is nowhere near done, the food is almost done, and the food is done. The system uses a string of colored lights around the cooking apparatus to communicate the state of the timer ambiently, reducing the need for the cook to walk around the kitchen to locate a centralized timer and spend time reading and interpreting the numbers on the tiny display.
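The three-state idea can be sketched in a few lines of Python. This is only an illustration under our own assumptions, not the TreadLight implementation: the light colors, the `timer_state` function, and the 20% "almost done" threshold are all hypothetical.

```python
from enum import Enum


class TimerState(Enum):
    # The light colors are assumptions chosen for illustration.
    NOWHERE_NEAR_DONE = "red"
    ALMOST_DONE = "yellow"
    DONE = "green"


def timer_state(remaining_s, total_s, almost_done_fraction=0.2):
    """Collapse a countdown into the three states the cook cares about.

    `almost_done_fraction` is a hypothetical threshold: the food counts as
    "almost done" once this fraction of the total time (or less) remains.
    """
    if total_s <= 0:
        raise ValueError("total_s must be positive")
    if remaining_s <= 0:
        return TimerState.DONE
    if remaining_s <= almost_done_fraction * total_s:
        return TimerState.ALMOST_DONE
    return TimerState.NOWHERE_NEAR_DONE


# A 10-minute timer with 1 minute left is "almost done".
print(timer_state(60, 600).value)  # yellow
```

The point of the design is that the lights only ever need to show one of these three values, so the cook never has to read a number.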
